writing

A Visual Exploration of Neural Radiance Fields

Apr 2023

Generating new views of a scene from limited input images — novel view synthesis — is hard. Traditional methods force a choice between flexibility and realism. Neural Radiance Fields (NeRF) learn a scene’s 3D structure and appearance directly from sparse 2D images, enabling photorealistic view synthesis. This post explains how NeRFs work and the trade-offs involved.

Volume Rendering

First, some background on 3D object representation. A “volume” spans three spatial dimensions, denoted , with “features” for each point in space (density, texture). Unlike meshes, defined solely by surface structure, volumes capture both surface and interior. This makes volumes natural for semi-transparent objects: clouds, smoke, liquids. For our purposes, the interesting features are color and density .

Rendering a 2D image from a volume means computing “radiance” — the reflection, refraction, and emittance of light from object to observer — to generate an pixel grid.

NeRF uses Ray Marching for volume rendering. Ray Marching constructs rays with origin and direction vectors for each pixel. Sampling along each ray yields color and density .

A transfer function (from classical volume rendering 1) forms an RGBA sample by accumulating from the point closest to the eye to the volume exit. Discrete form:

where , , and is the sampling distance.

The transfer function captures an intuitive idea: objects behind dense or opaque materials contribute less to the final image than those behind transparent materials. Flowers behind a glass vase are more visible than flowers behind an opaque ceramic vase.

The rendering component must be differentiable for gradient descent optimization 2. This allows fine-tuning based on the gap between rendered and target images.

The true innovation lies not in rendering, but in encoding the volume. NeRF addresses two challenges:

-

How can we encode the volume to query any arbitrary point in space? This enables rendering from viewpoints not in the original dataset.

-

How can we account for non-matte surfaces? Matte surfaces scatter light uniformly, but glossy and specular materials have complex reflectance. A NeRF trained on a shiny metal sculpture should capture its specular highlights and reflections.

While differentiable rendering enables optimization, NeRF’s innovation lies in encoding: query-ability at arbitrary points and handling non-matte surfaces.

Volume Representation

Consider a naive approach: voxel grids. Voxel grids discretize 3D space into cubes, storing metadata in an array (material, color, viewpoint-specific features). The challenge: no advantage from natural symmetries, and storage/rendering requirements explode in . Viewpoint-specific reflections and lighting need separate handling in rendering.

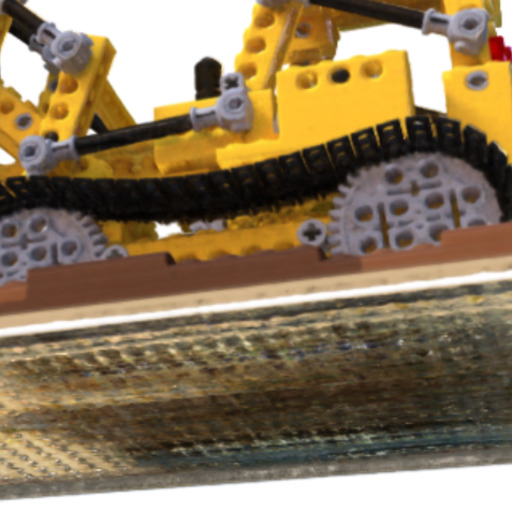

You can get a good feel for the performance impact by increasing the level of granularity in the Sine-wave Voxel Grid. I encourage you to compare this level of detail and fluidity to our NeRF Lego bulldozer.

WebGL is not available in your browser.

Neural Radiance Fields (NeRF)

What is a volume if not a function mapping? Volumes have symmetries we’d like to exploit. Densely connected neural networks with ReLU activations 3 are excellent approximators given sufficient data.

NeRF 4 uses densely connected ReLU networks (8 layers of 256 units) to represent volume. The network outputs for each spatial/viewpoint pair. This implicitly encodes material properties — lighting, reflectance, transparency — normally specified manually. NeRF learns a continuous, high-fidelity scene representation from input images:

Optimizing

To optimize NeRF, a volume renderer generates a 2D image from the learned 3D representation by ray marching and accumulating color/density values. Comparing this to ground truth yields a pixel-squared error loss. Minimizing this loss via gradient descent teaches the model to represent the scene accurately.

NeRF works for novel view synthesis 4, dynamic scenes 5, and large-scale reconstruction 6. Learning high-fidelity, continuous representations from input images makes it powerful for computer vision and graphics.

Challenges

Data Requirements and Training Times

A key challenge: NeRFs need substantial training data. Basic NeRF models have no prior knowledge — each model trains from scratch for each scene.

Data requirements vary with scene complexity and desired quality. A simple uniformly textured object needs fewer training images than a complex outdoor scene with intricate details and varying lighting.

Training a high-quality NeRF takes hours or days, depending on dataset size and compute. The original paper 4 reported 1-2 days for 100-400 images on a single GPU. These training times limit practical applications, especially for large-scale or dynamic scenes.

We can see the impact that has on both training time, and the data requirements in our training simulator.

Special Sauce

The above examples lack the clarity of published NeRFs. Beyond training time, state-of-the-art performance requires a few additional techniques.

Held-out Viewing Directions

Leave viewing direction out of the first 4 layers. This reduces view-dependent (often floating) artifacts that occur when the model prematurely optimizes view-dependent features before learning spatial structure.

Fourier Transforms

The original NeRF doesn’t use raw RGB images and viewpoints as input — it transforms them using Fourier features. Fourier transforms decompose signals into constituent frequencies. Applying Fourier features to input coordinates lets the network capture high-frequency details essential for fine structures and textures.

The precise mechanism to utilise Fourier features is to apply a pre-processing step to transform the position into sinusoidal signals of exponential frequencies.

Densely connected ReLU networks cannot model signals with fine detail or represent spatial/temporal derivatives essential to physical signals. Sitzmann 7 reached a similar realization, though they changed the activation function rather than pre-processing inputs.

Imagine a simple 1D signal with sharp edges and fine details. A standard ReLU MLP would struggle, producing smoothed or blurred output. Fourier feature transformation lets the network capture high-frequency components, representing sharp edges and fine details accurately.

This seemingly arbitrary trick yields substantially better results and removes the tendency to blur images, letting the network capture essential spatial and temporal derivatives.

The stationary property of Fourier feature dot products (Positional Encoding is one type) is essential. Stationarity means statistical properties remain constant across positions. The similarity between two points in transformed space depends only on their relative positions, not absolute locations.

Stationarity lets the ReLU MLP learn weights that capture high-frequency components regardless of position. These learned weights correspond to periodic functions. These functions represent the transformed signal’s high-frequency components.

Hierarchical Sampling

An obvious question about classical volume rendering: why use uniform sampling when most models have large empty spaces (low densities) and diminishing value from samples behind dense objects?

The original NeRF 4 learns two models: a coarse-grained model for density estimates and a fine-grained model identical to the original. The coarse model re-weights fine-grained sampling, producing better results.

Conclusion

You now have an overview of Neural Radiance Fields and how they work. NeRFs offer a flexible, powerful framework for encoding and rendering 3D scenes. The original approach has limitations — substantial data requirements and long training times — but ongoing research addresses these challenges and expands NeRF capabilities. As the field evolves, expect advancements making NeRFs more practical and accessible.

Footnotes

-

Volume rendering

Drebin, R.A., Carpenter, L. and Hanrahan, P., 1988. ACM Siggraph Computer Graphics, Vol 22(4), pp. 65—74. ACM New York, NY, USA. ↩ -

For the full details of how Ray Marching works, I’d recommend 1000 Forms of Bunny’s guide, and you can also see the code used for the TensorFlow renderings which uses a camera projection for acquiring rays and a 64 point linear sample. ↩

-

Rectified linear units improve restricted boltzmann machines

Nair, V., & Hinton, G. E. (2010). In Proceedings of the 27th international conference on machine learning (ICML-10) (pp. 807-814). ↩ -

Nerf: Representing scenes as neural radiance fields for view synthesis

Mildenhall, B., Srinivasan, P.P., Tancik, M., Barron, J.T., Ramamoorthi, R. and Ng, R., 2020. European conference on computer vision, pp. 405—421. ↩ ↩2 ↩3 ↩4 -

D-nerf: Neural radiance fields for dynamic scenes

Pumarola, A., Corona, E., Pons-Moll, G. and Moreno-Noguer, F., 2021. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 10318—10327. ↩ -

Nerf in the wild: Neural radiance fields for unconstrained photo collections

Martin-Brualla, R., Radwan, N., Sajjadi, M.S., Barron, J.T., Dosovitskiy, A. and Duckworth, D., 2021. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 7210—7219. ↩ -

Implicit neural representations with periodic activation functions

Sitzmann, V., Martel, J., Bergman, A., Lindell, D. and Wetzstein, G., 2020. Advances in Neural Information Processing Systems, Vol 33. ↩