
VibReality: Vibrotactile Haptic Gloves

May 2022

Simple vibration-based “haptic” feedback can be sufficient to create the illusion of touch. This is well established for touchpads, with key examples such as Apple’s “Taptic Engine”, which creates the illusion of a physical button press from carefully designed vibration waveforms.

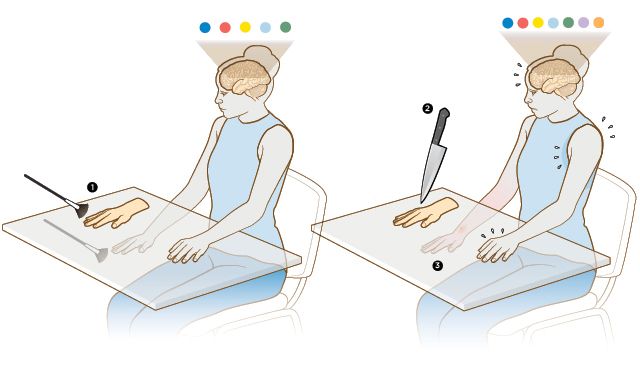

The well-known Rubber Hand Illusion, used to test Embodiment, relies on soft stroking tactile feedback

Touch is the most sought-after sense for Embodiment. In Virtual Reality settings, Embodiment is a key factor in enjoyment and immersion. However, the focus in VR haptics has traditionally been on force feedback (for example, using motors, reels, capstans, or exoskeletons to control finger positioning) rather than on vibrotactile haptics.

This research focus is not without good reason: vibrotactile feedback is insufficient to replicate reality, because vibrations cannot provide the restraining forces needed to represent fixed objects in space. However, symbolic feedback might be sufficient to improve Embodiment (ownership, agency, and self-location) and to allow for usable touch-based interfaces in VR.

The project is therefore to create vibrotactile haptic gloves with custom virtual reality-linked waveforms.

Hardware Description

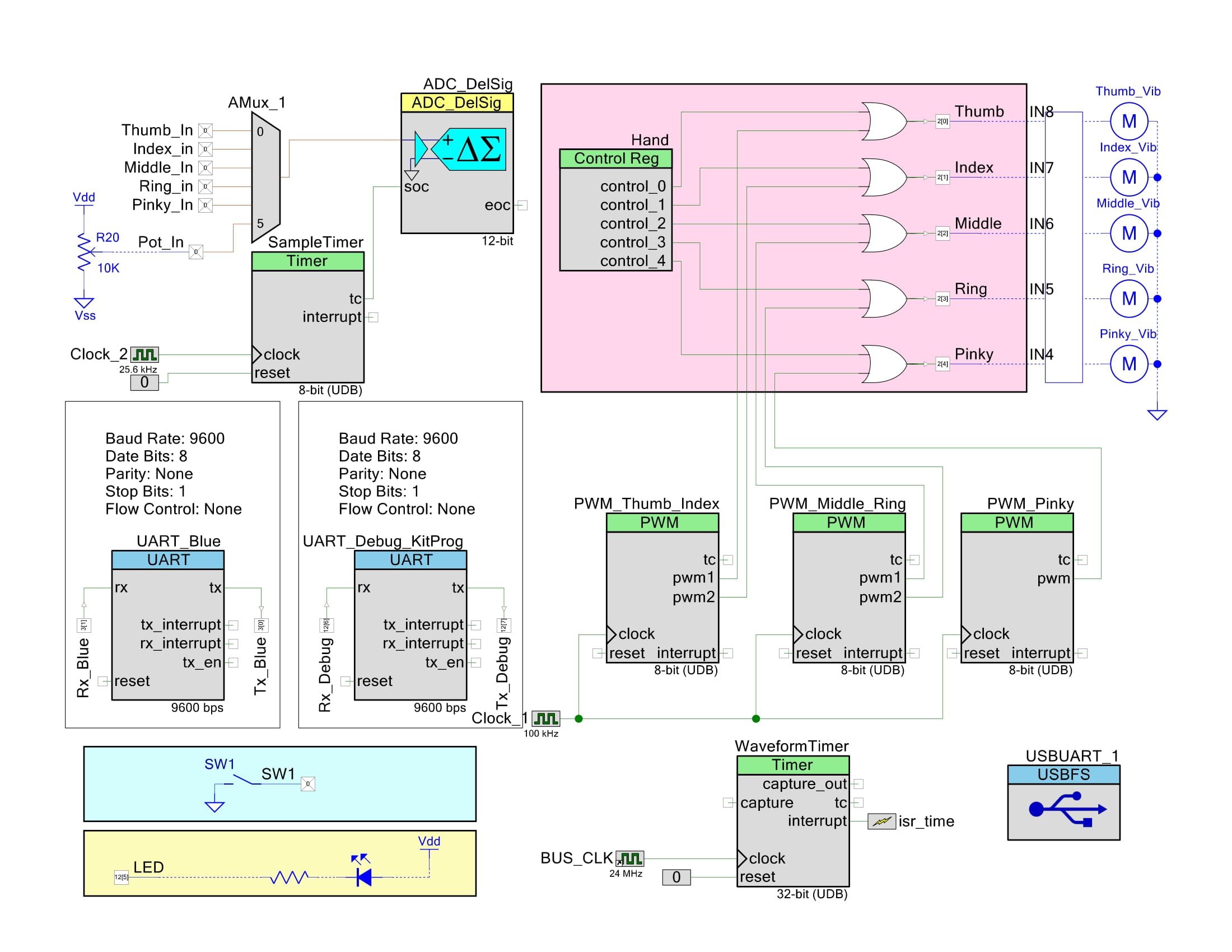

Front design, with the PSoC Stick (CY8C5888LTI-LP097) used as the primary controller

In the system, the PSoC microcontroller is the central hub and processor, connected to 5 ERM (eccentric rotating mass) tactors. These are driven through a Darlington array, which supports the higher current draw (500mA) of the multiple tactors.

Each tactor is driven by a PWM signal whose duty cycle is modulated over time to produce different waveforms. To do this effectively, we replicated the functionality of commercial haptic drivers (DA7282 / DRV2605).

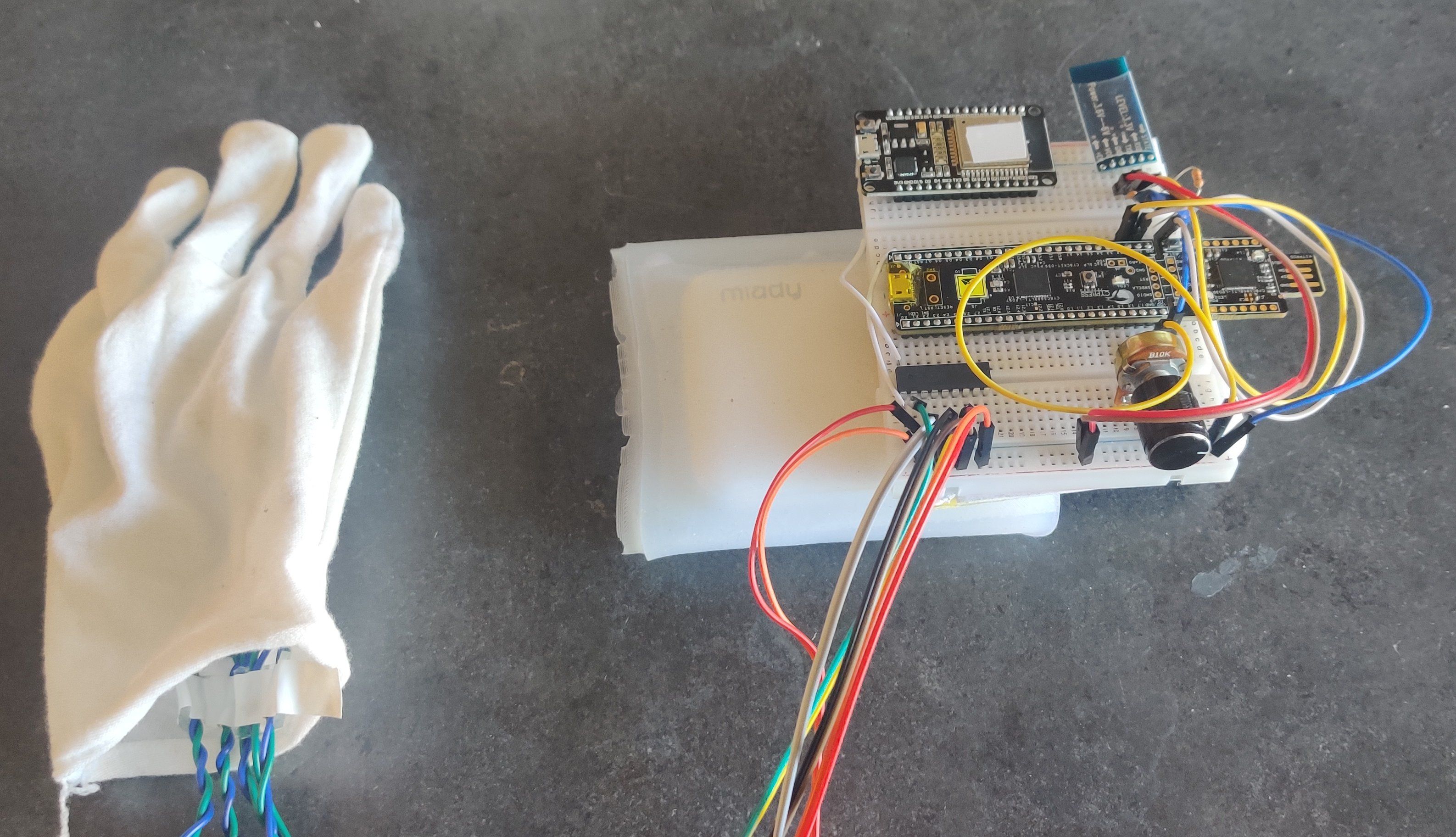

Wireless setup for the Haptic Gloves: the battery pack forms the base of the wrist-mounted breadboard. The long wires allow for additional flex when wearing the combined system.

From a hardware perspective, we also use an HC-06 module to provide Bluetooth connectivity over a UART connection. This link streams “Landmark” hand information from an external provider (for a more detailed analysis of this, see Keyboard Expanse). In this case, we use a machine-learning hand-tracking model with a webcam; the model could not fit on the PSoC. This landmark information is then used to position the hands within the virtual world.

Back-EMF sensing to collect tap data was present in the design but not fully utilized. The initial differential-voltage approach turned out not to work with the ERM motor drivers (or rather, it would have required additional amplifiers). An amplitude measurement seems likely to perform best, as pressure on the vibration motors appears to increase their current draw.
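The current-based tap detection suggested above was not implemented, but one plausible form is a threshold over a slowly moving baseline of each tactor's current samples. The sketch below assumes integer ADC readings of motor current; `tap_detector_t`, the 1/16 smoothing factor, and the threshold are hypothetical choices, not part of the project.

```c
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical per-tactor tap detector state. */
typedef struct {
    int32_t baseline;  /* moving average of current samples, scaled by 16 */
    int32_t threshold; /* rise above baseline (raw ADC counts) treated as a tap */
} tap_detector_t;

void tap_init(tap_detector_t *d, int32_t first_sample, int32_t threshold)
{
    d->baseline = first_sample * 16;
    d->threshold = threshold;
}

/* Feed one current sample; returns true if it exceeds the baseline by more than threshold. */
bool tap_update(tap_detector_t *d, int32_t sample)
{
    int32_t base = d->baseline / 16;
    bool tapped = (sample - base) > d->threshold;
    if (!tapped) {
        /* Exponential moving average with alpha = 1/16, updated only on idle
           samples so a sustained press is not absorbed into the baseline. */
        d->baseline += sample - base;
    }
    return tapped;
}
```

Freezing the baseline during a detected tap keeps a long press from slowly cancelling itself out. In practice the baseline would also need to account for the commanded duty cycle, since the drive level itself changes the current drawn.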

Early versions of the design also used a potentiometer to cap the maximum intensity, as sustained full-strength vibration (5V, duty 255/255) could be unpleasant. Applying different waveforms greatly improved the experience of the haptics.
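A potentiometer cap like this can be applied in software by scaling each requested duty value by the potentiometer reading. This is a minimal sketch assuming both values are 8-bit (0-255), for example from an 8-bit ADC read of the potentiometer; `cap_duty` is a hypothetical name.

```c
#include <stdint.h>

/* Scale a requested duty (0-255) by a potentiometer reading (0-255) used as a
   maximum-intensity cap, rounding to the nearest integer. */
uint8_t cap_duty(uint8_t requested, uint8_t pot_max)
{
    return (uint8_t)(((uint16_t)requested * pot_max + 127u) / 255u);
}
```

Scaling, rather than clamping at the cap, keeps the shape of each waveform intact and only lowers its peak.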

Software Description

Haptic Animations & Driver

VibReality replicates the DA7282 / DRV2605 with custom waveform sequences: it varies the PWM duty cycle over time to create waveform transitions on top of the PWM carrier frequency (100kHz). The ERM vibration motors act as an implicit low-pass filter on the PWM carrier, but still respond to changes in the bass-to-midrange band of roughly 1 to 2,000Hz.
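As a rough illustration of this duty-cycle modulation, the sketch below computes the 8-bit duty value at one point in time of a slow envelope, leaving the 100kHz carrier to the PWM hardware. The function name, the `wave_shape_t` shapes, and the tick-based timing are assumptions for illustration, not the project's code; a sine shape would typically use a lookup table instead of the triangle shown here.

```c
#include <stdint.h>

/* Hypothetical envelope shapes; a sine would normally come from a lookup table. */
typedef enum { WAVE_SQUARE, WAVE_SAWTOOTH, WAVE_TRIANGLE } wave_shape_t;

/*
 * Return the 8-bit PWM duty cycle (0-255) at time t of an envelope repeating
 * every `period` ticks. The envelope swings from offset up to offset + amplitude
 * and is clamped at 255. The 100 kHz carrier itself is generated by the PWM block.
 */
uint8_t envelope_duty(wave_shape_t shape, uint32_t t, uint32_t period,
                      uint8_t offset, uint8_t amplitude)
{
    if (period == 0u) {
        return offset; /* no modulation: hold the DC offset */
    }
    uint32_t phase = (t % period) * 256u / period; /* position in cycle, 0..255 */
    uint32_t level;                                 /* fraction of amplitude, 0..255 */
    switch (shape) {
    case WAVE_SQUARE:
        level = (phase < 128u) ? 255u : 0u;
        break;
    case WAVE_SAWTOOTH:
        level = phase;
        break;
    case WAVE_TRIANGLE:
    default:
        level = (phase < 128u) ? phase * 2u : (255u - phase) * 2u;
        break;
    }
    uint32_t duty = offset + (amplitude * level) / 255u;
    return (uint8_t)(duty > 255u ? 255u : duty);
}
```

Calling `envelope_duty` from a periodic timer interrupt and writing the result to the PWM compare register would produce the envelope; with a 10kHz update tick, a `period` of 100 ticks gives a 100Hz envelope.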

Using cheap ($2 per piece) ERM motors limits the responsiveness to frequency changes, but still allows us to create several waveforms, shown below.

The waveform is selected through a flashable setting, in part because the ERM motor has limited expressiveness in frequency.

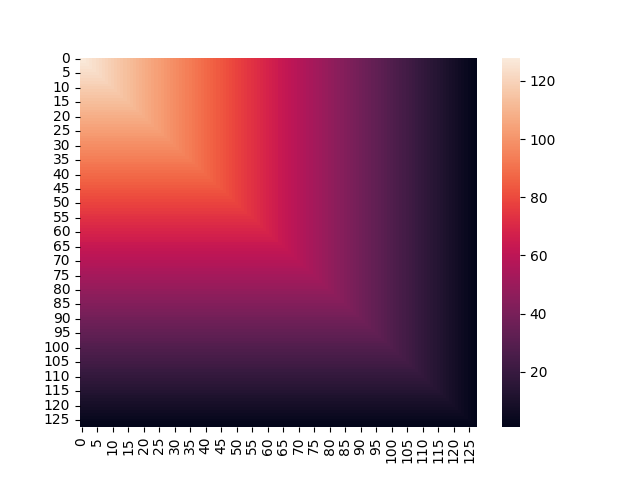

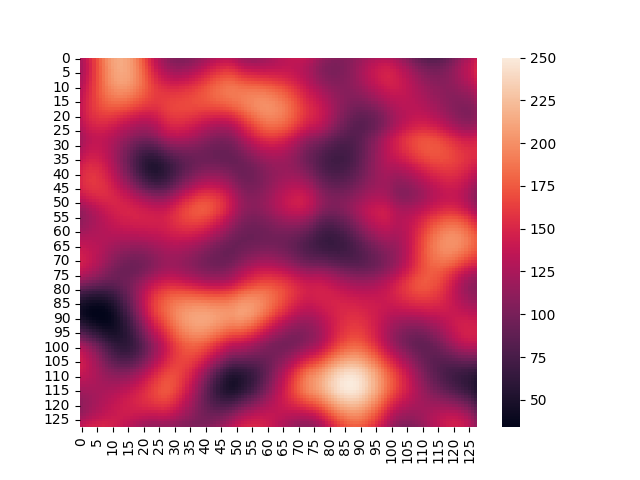

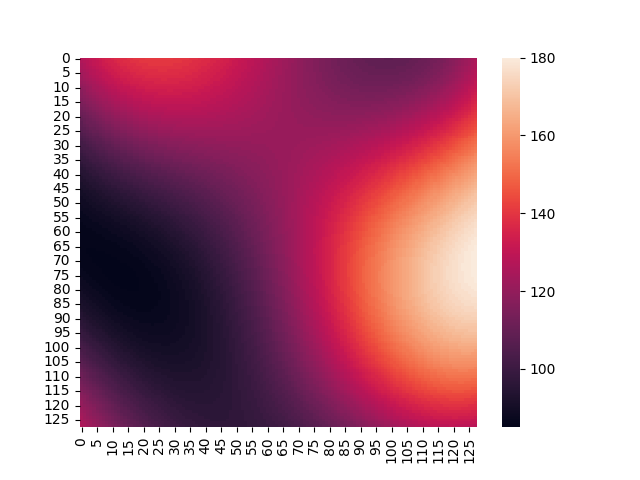

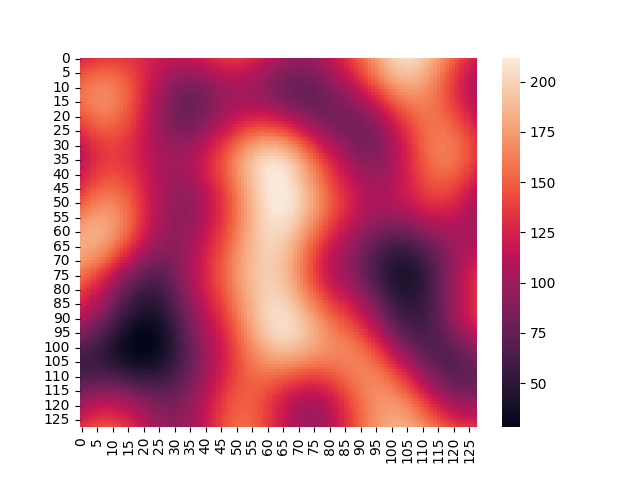

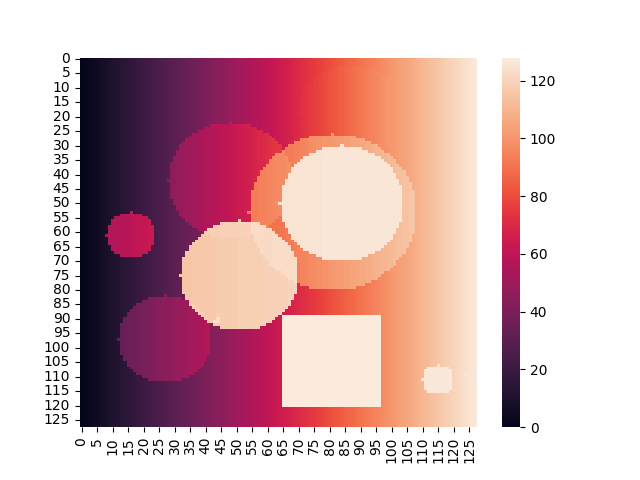

Virtual World Map (Vibration Map)

Our haptic glove will act as a gateway to a hidden, virtual world that can only be experienced by the vibration it exerts on the user’s fingertips. As you move your hand around in space you’ll get the impression of a physical object (e.g. a sphere hanging in space) via the vibrations.

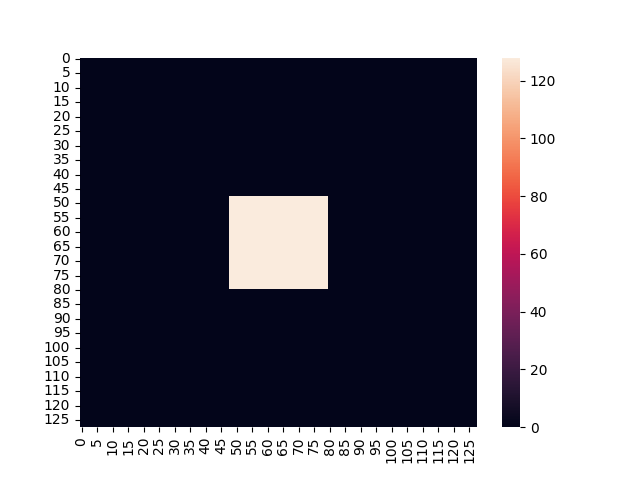

We consider only a 2D grid for this project, which allows the virtual world to be treated as an image-processing task. This simple adjustment lets us apply standard graphics utilities to our 2D map.

VibReality uses a 128x128 grid of offset bytes (configurable), sized for the limited memory of the PSoC Stick. In this case, a stack of 2048 bytes and a heap of 128 bytes ensures that we can store segments in memory and operate on them dynamically.

By adding further “channels” to this “vibration image”, we can configure DC offset, simulated frequency, and simulated amplitude, as well as waveform type.
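To make the memory constraint concrete: one 128x128 channel of bytes occupies 128 × 128 = 16,384 bytes, far more than the 2048-byte stack or 128-byte heap, so this sketch keeps the offset channel in a static array. The names (`vib_offset`, `vib_set`, `vib_get`, `vib_cell`) are hypothetical, and the sketch assumes the hand tracker delivers landmark coordinates normalised to 0.0-1.0. Each additional channel would be another array of the same shape.

```c
#include <stdint.h>
#include <string.h>

/* Grid size (configurable, as in the write-up). */
#ifndef VIB_W
#define VIB_W 128
#endif
#ifndef VIB_H
#define VIB_H 128
#endif

/* DC-offset channel of the "vibration image": VIB_W * VIB_H bytes of static RAM. */
static uint8_t vib_offset[VIB_H][VIB_W];

void vib_clear(void) { memset(vib_offset, 0, sizeof vib_offset); }

/* Bounds-checked write: points outside the map are ignored. */
void vib_set(int x, int y, uint8_t v)
{
    if (x >= 0 && x < VIB_W && y >= 0 && y < VIB_H)
        vib_offset[y][x] = v;
}

/* Bounds-checked read: anything outside the map reads as 0 (no vibration). */
uint8_t vib_get(int x, int y)
{
    if (x >= 0 && x < VIB_W && y >= 0 && y < VIB_H)
        return vib_offset[y][x];
    return 0;
}

/* Map a normalised landmark coordinate (assumed 0.0-1.0) to a grid index. */
int vib_cell(float u, int size)
{
    int c = (int)(u * (float)size);
    if (c < 0) c = 0;
    if (c >= size) c = size - 1;
    return c;
}
```

Looking up the vibration under a fingertip at normalised position (u, v) then becomes `vib_get(vib_cell(u, VIB_W), vib_cell(v, VIB_H))`.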

Our virtual world is composed of various graphics objects:

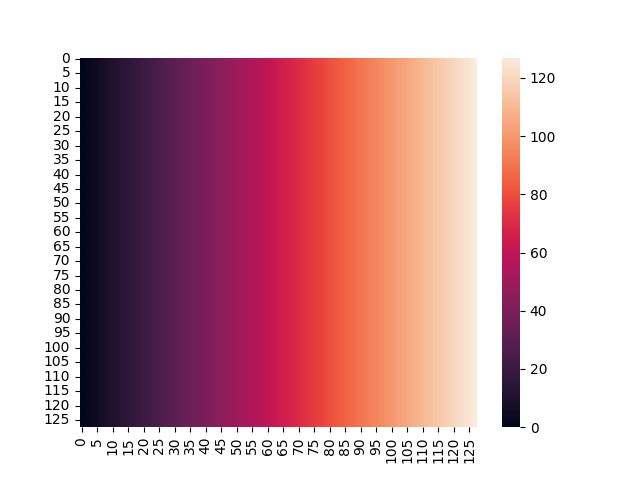

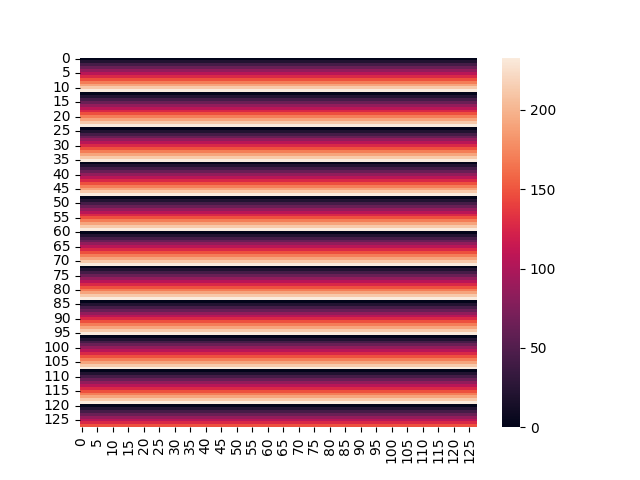

- Swipes: A simple for loop over one axis produces a gradient swipe.

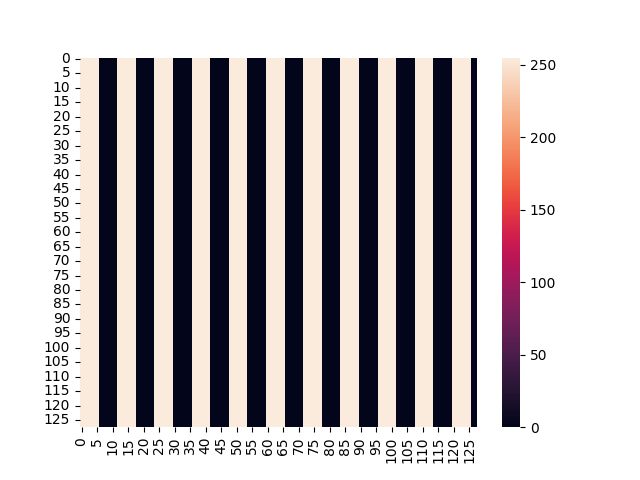

- Stripe: Specifying minimum / maximum values as well as spacing allows for simple stripe patterns.

- Perlin: These environments are pre-generated and hardcoded; further work would move the Perlin algorithm on-device.

- Square: Given a top-left coordinate and width and height we can render a square.

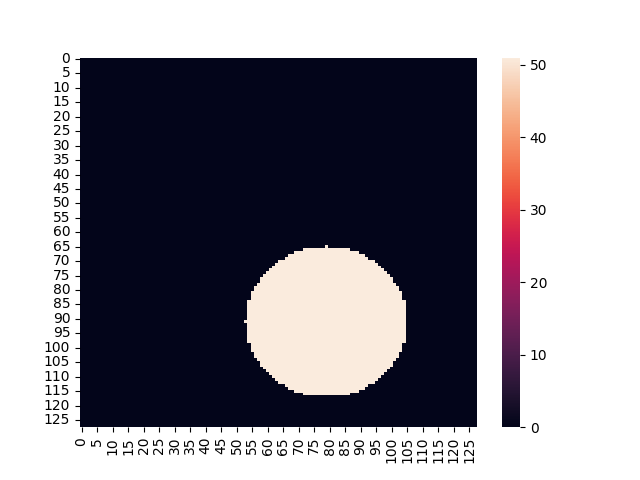

- Circles: By looping over the bounding box of the circle, we can set every point (x, y) that lies within the radius of the centre.
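The shape primitives listed above can be sketched as simple loops over a byte grid. This version is a self-contained illustration with its own hypothetical 128x128 `grid` and clipping `put` helper; the linear swipe along x, alternating stripe bands, and distance test for circles are assumptions about how each primitive might be implemented, not the project's source.

```c
#include <stdint.h>

#define VIB_W 128
#define VIB_H 128

static uint8_t grid[VIB_H][VIB_W];

/* Clipped write: shapes may extend past the map edge. */
static void put(int x, int y, uint8_t v)
{
    if (x >= 0 && x < VIB_W && y >= 0 && y < VIB_H) grid[y][x] = v;
}

/* Swipe: loop over x; value rises linearly from lo (x = 0) to hi (x = VIB_W - 1). */
void render_swipe(uint8_t lo, uint8_t hi)
{
    for (int x = 0; x < VIB_W; x++) {
        uint8_t v = (uint8_t)(lo + (hi - lo) * x / (VIB_W - 1));
        for (int y = 0; y < VIB_H; y++) put(x, y, v);
    }
}

/* Stripes: alternate bands of `width` columns between hi and lo. */
void render_stripes(uint8_t lo, uint8_t hi, int width)
{
    if (width <= 0) return;
    for (int x = 0; x < VIB_W; x++) {
        uint8_t v = ((x / width) % 2 == 0) ? hi : lo;
        for (int y = 0; y < VIB_H; y++) put(x, y, v);
    }
}

/* Square: fill w x h cells starting at the top-left corner (x0, y0). */
void render_square(int x0, int y0, int w, int h, uint8_t v)
{
    for (int y = y0; y < y0 + h; y++)
        for (int x = x0; x < x0 + w; x++) put(x, y, v);
}

/* Circle: scan the bounding box and set points within radius r of (cx, cy). */
void render_circle(int cx, int cy, int r, uint8_t v)
{
    for (int y = cy - r; y <= cy + r; y++) {
        for (int x = cx - r; x <= cx + r; x++) {
            int dx = x - cx, dy = y - cy;
            if (dx * dx + dy * dy <= r * r) put(x, y, v);
        }
    }
}
```

Comparing squared distances avoids a square root per cell, which matters on a microcontroller without hardware floating point.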

In addition to rendering individual graphic objects, we can also compose these objects together. This allows rich 2D virtual environments to be expressed.
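The write-up does not say how overlapping objects are combined; two common choices are a saturating add and a per-cell maximum, sketched below on flat byte arrays. All names here are hypothetical.

```c
#include <stdint.h>

/* Saturating add: overlapping layers sum their intensities, clipped at 255. */
uint8_t blend_add(uint8_t a, uint8_t b)
{
    unsigned s = (unsigned)a + b;
    return (uint8_t)(s > 255u ? 255u : s);
}

/* Maximum: the stronger layer wins at each cell. */
uint8_t blend_max(uint8_t a, uint8_t b) { return a > b ? a : b; }

/* Compose layer src onto dst, cell by cell, with the chosen blend operator. */
void compose(uint8_t *dst, const uint8_t *src, int n,
             uint8_t (*op)(uint8_t, uint8_t))
{
    for (int i = 0; i < n; i++) dst[i] = op(dst[i], src[i]);
}
```

A saturating add makes overlapping shapes feel stronger where they meet, while the maximum keeps each object's own peak intensity.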

Waveform Library

Designing haptics is similar to writing music:

- Rhythm: Small fast beats feel urgent. Long pauses create tension.

- Volume / Intensity: Long beats versus short bursts. Consistent beats are calmer.

- Sharpness / Timbre: Buzz (sawtooth) vs Beats (sine waves)

Standard conventions:

- Descending patterns => Failure

- Ascending patterns => Success

- Flat / Repeating patterns => Progress
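These conventions could be encoded as short step sequences of duty cycle and duration, similar in spirit to a haptic driver's effect library. The `haptic_step_t` type, the pattern names, and all numeric values are illustrative assumptions rather than the project's waveforms.

```c
#include <stddef.h>
#include <stdint.h>

/* One step of a haptic pattern: hold `duty` (0-255) for `ms` milliseconds. */
typedef struct { uint8_t duty; uint16_t ms; } haptic_step_t;

/* Illustrative patterns: ascending = success, descending = failure, flat = progress. */
static const haptic_step_t PATTERN_SUCCESS[]  = { {80, 60}, {0, 40}, {160, 60}, {0, 40}, {255, 90} };
static const haptic_step_t PATTERN_FAILURE[]  = { {255, 90}, {0, 40}, {160, 60}, {0, 40}, {80, 60} };
static const haptic_step_t PATTERN_PROGRESS[] = { {150, 50}, {0, 150}, {150, 50}, {0, 150} };

#define PATTERN_LEN(p) (sizeof(p) / sizeof((p)[0]))

/* Return the duty cycle t_ms into a pattern, or 0 once the pattern has finished. */
uint8_t pattern_duty(const haptic_step_t *steps, size_t n, uint32_t t_ms)
{
    for (size_t i = 0; i < n; i++) {
        if (t_ms < steps[i].ms) return steps[i].duty;
        t_ms -= steps[i].ms;
    }
    return 0;
}
```

A repeating pattern such as progress can loop by passing `t_ms` modulo the pattern's total duration.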

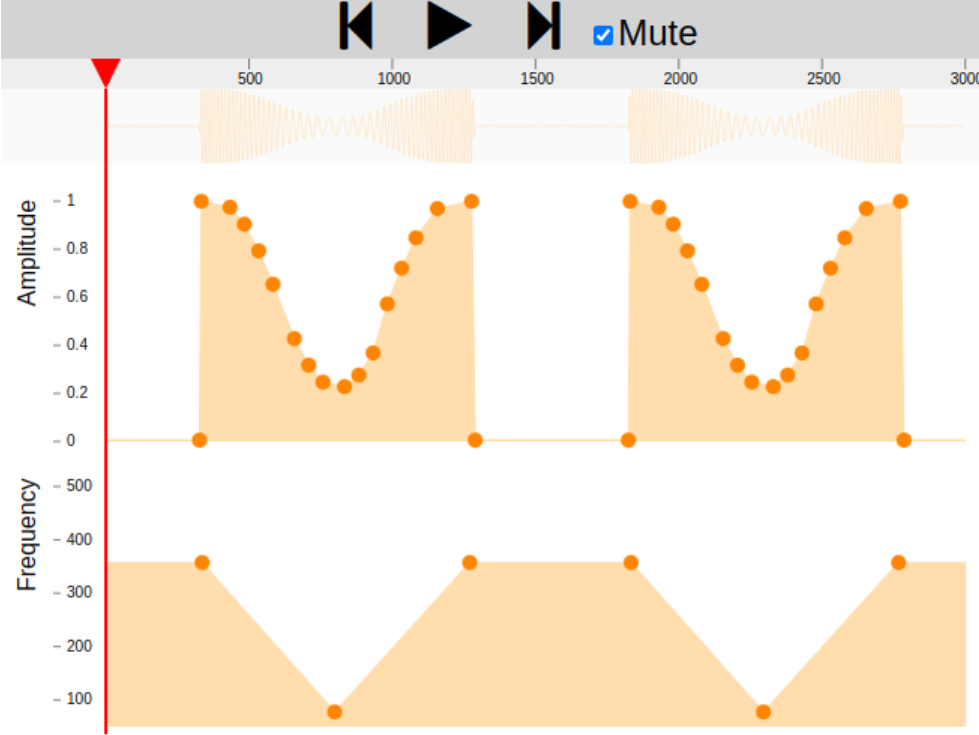

I started to explore how we could encode various patterns by importing a .wav file, a convention common in some of the literature studying haptics.

Macaron Waveform Editor developed by the SPIN research group at the University of British Columbia (UBC).

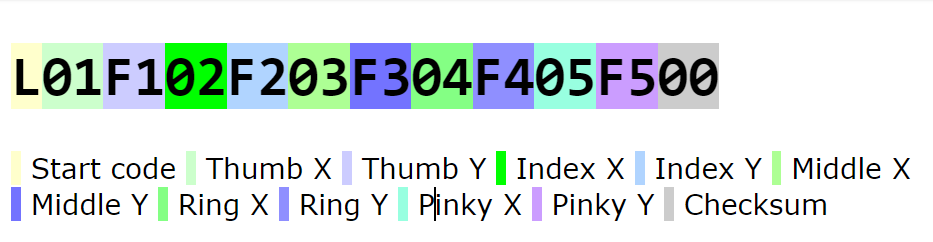
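One way to turn an imported .wav into something an ERM can play is to reduce the audio to an amplitude envelope. The sketch below assumes the WAV header has already been parsed and the samples are signed 16-bit PCM; `pcm_to_envelope` and the peak-per-window approach are hypothetical choices, not a description of the Macaron editor's export format.

```c
#include <stddef.h>
#include <stdint.h>

/*
 * Reduce signed 16-bit PCM samples to an 8-bit duty envelope: one output value
 * per `window` input samples, taken as the peak absolute amplitude in that window.
 * Returns the number of envelope values written (at most out_cap).
 */
size_t pcm_to_envelope(const int16_t *pcm, size_t n, size_t window,
                       uint8_t *out, size_t out_cap)
{
    size_t written = 0;
    if (window == 0) return 0;
    for (size_t start = 0; start < n && written < out_cap; start += window) {
        int32_t peak = 0;
        for (size_t i = start; i < start + window && i < n; i++) {
            int32_t a = pcm[i] < 0 ? -(int32_t)pcm[i] : pcm[i];
            if (a > peak) peak = a;
        }
        /* Map peak amplitude 0..32768 onto duty 0..255. */
        out[written++] = (uint8_t)(peak * 255 / 32768);
    }
    return written;
}
```

At a 44.1kHz sample rate, a window of 441 samples yields one envelope value every 10ms.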

Serialisation & Bluetooth

Serialisation of Landmarks

Bluetooth (via the HC-06) and USB were both used in this design as serial user inputs to the system, as well as for debug output.

To allow for flexibility, there is a built-in parser that performs both deserialisation and command selection.

For instance:

- V - Print the help prompt.

- L <pkt> - Set Landmarks given <pkt> data.

- M <mode> - Set Mode to <mode>.

- T <msg> - Set <msg> for fingers.

- PutC:x,y,r - Put Circle at point (x, y) with radius r.

- PutS:x,y,w,h - Put Square with a top-left point at (x, y), width w, and height h.

- Clear - Clear virtual world.
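A minimal sketch of how the shape commands could be decoded, using the standard `sscanf` from `<stdio.h>`. The `command_t` layout and enum names are hypothetical, and the `L`, `M`, and `T` commands are left out because their packet formats are not described here.

```c
#include <stdio.h>
#include <string.h>

/* Hypothetical command kinds; only the fixed-format commands are decoded. */
typedef enum { CMD_UNKNOWN, CMD_HELP, CMD_CLEAR, CMD_PUT_CIRCLE, CMD_PUT_SQUARE } cmd_kind_t;

typedef struct {
    cmd_kind_t kind;
    int a[4]; /* PutC: x, y, r   PutS: x, y, w, h */
} command_t;

/* Parse one line (newline already stripped) into a command. */
command_t parse_command(const char *line)
{
    command_t c = { CMD_UNKNOWN, {0, 0, 0, 0} };
    if (strcmp(line, "V") == 0) {
        c.kind = CMD_HELP;
    } else if (strcmp(line, "Clear") == 0) {
        c.kind = CMD_CLEAR;
    } else if (sscanf(line, "PutC:%d,%d,%d", &c.a[0], &c.a[1], &c.a[2]) == 3) {
        c.kind = CMD_PUT_CIRCLE;
    } else if (sscanf(line, "PutS:%d,%d,%d,%d",
                      &c.a[0], &c.a[1], &c.a[2], &c.a[3]) == 4) {
        c.kind = CMD_PUT_SQUARE;
    }
    return c;
}
```

`sscanf` returns the number of fields converted, so an incomplete command such as `PutC:1,2` falls through to `CMD_UNKNOWN`; note that trailing characters after the last number are not rejected.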

Conclusion

This project covers only a small part of the opportunity in haptic rendering. The Virtual World is an abstraction that allows otherwise simple haptic sensations to create rich textures and vivid sensory images.